Do I Need Full Frame?

Hi Readers! Thanks for stopping by.

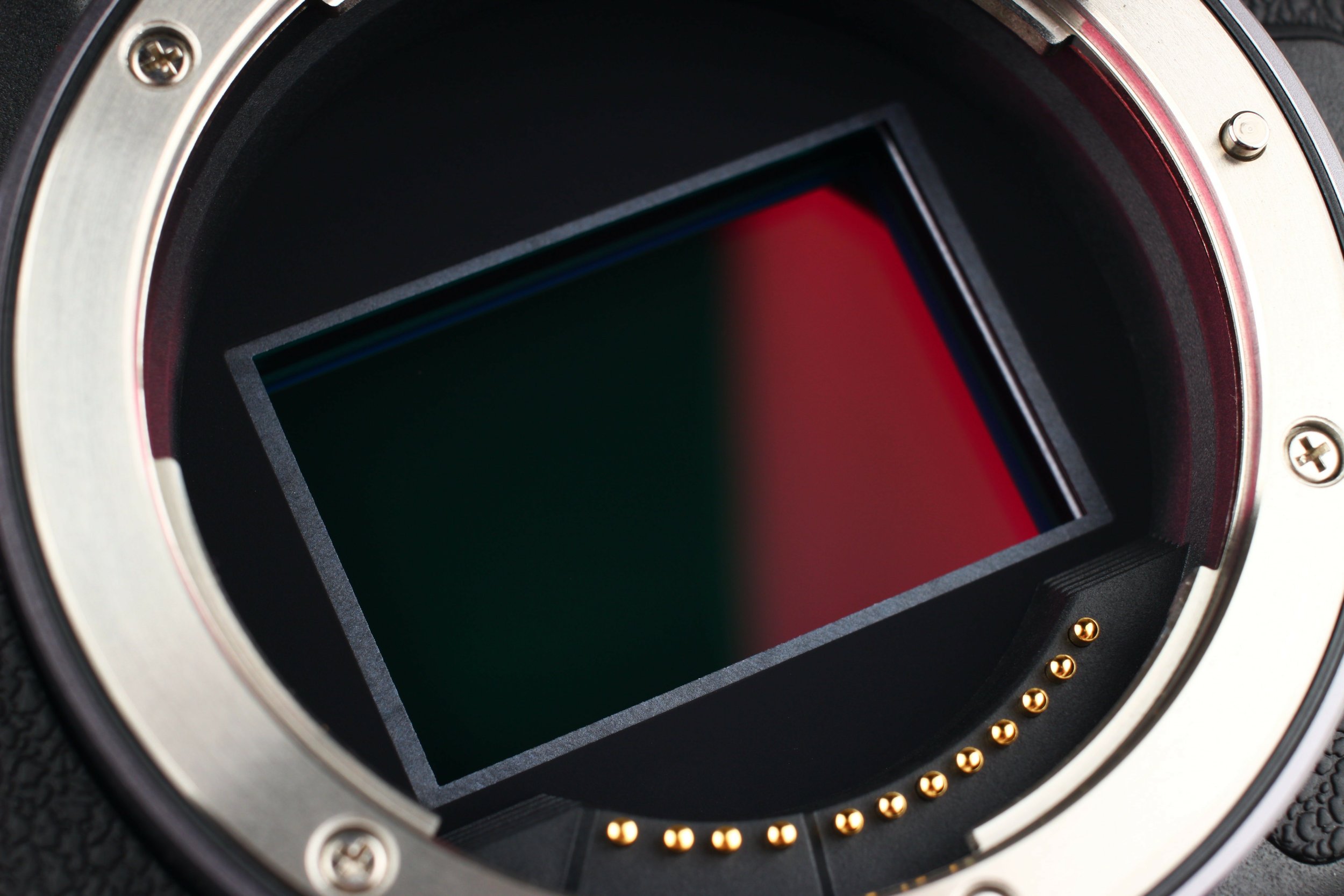

If there is one comment or question that never gets old, it is the one that goes along the lines of “should I go to a full frame sensor?” One could choose to be overly literal and suggest that all sensors are full frame, since none are released to use less than their capabilities, but this is not the actual question. The real question is whether you are better suited to a 36mm x 24mm sensor, the same size as an old 35mm film frame, or to something smaller.

The answer is always, it depends.

Perceived and Actual Benefits

If we think back to film, we used larger negatives or transparencies in order to obtain higher resolution. This was driven largely by the chemical nature of film grain. Film grain for a given film at a given ISO was the same size regardless of negative size, so if we wanted higher resolution, the only route available was a physically larger negative. While most of the world was content with 35mm, many pros of the time chose to go with larger negatives such as 6cm x 4.5cm, 6cm x 6cm, 6cm x 7cm, 4in x 5in and 8in x 10in. Each step up required different lenses to create larger image circles and larger camera bodies to accommodate the larger negative frame. This meant more weight and bulk with the payoff of greater resolution.

However, film grain size is no longer relevant. We now deal with pixels that record light. We have all experienced the mostly foolish megapixel race, which promises that more megapixels simply means higher resolution. It is not that simple, because the frame size remains constant, so the only way to get more photoreceptors into the same size frame is to make each photoreceptor smaller and to try to reduce the space between them. What we get is more pixels per unit area, which sounds equivalent to smaller grain in film, but it really isn’t. There are tradeoffs that each intelligent photographer needs to consider to make the choice that is best for his or her use cases.

Just like film, sensors do improve over time, so today’s 50MP sensor will deliver higher quality data than the first ones of about 10 years ago. The question of whether you can see it in your use cases is extremely important but often completely ignored.

A smaller sensor, all things being equal, will have fewer pixels because its image frame is smaller. One then concludes that its resolution and image quality will be lower and that it will be less successful. While this sounds logical on its own, it completely ignores other factors that make this automatic conclusion spurious. In a world where there were no other factors, a larger sensor would have more space for receptors than a smaller sensor, and one could argue that it delivers more resolution than a smaller sensor, just as 4x5 negatives had higher resolution than 35mm based on grain. There are, however, other factors.

Those Other Factors

First, we need to think about the surface area of the photo receptor. The larger the surface area, the more light gathering capability. In general, the more light gathering capability, the less power is needed to operate the photo receptor and the better the signal to noise ratio. As the count of photo receptors goes up in a fixed frame size, each one must therefore have a smaller surface area, so more power is needed to gather light at the same level as the larger surface area photo receptor. This increase in power requirements lowers the signal to noise ratio. We photographers see the results of this as the ability of a lower photo receptor count sensor to perform better in low light than a higher photo receptor count sensor. Of course, sensor makers always work to improve their next generation of sensors, otherwise they have nothing new to sell, although now that the number of actual sensor manufacturers has fallen, there are fewer of them to drive this competition. Technically the higher photo receptor count sensor would have higher resolution, but it may perform at such a detriment in lower light that all you get is high resolution digital noise.

This is not speculation or opinion. It is scientific fact.
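To put rough numbers on the surface area argument, here is a minimal sketch in Python. It assumes square photo receptors that tile the whole 36mm x 24mm frame with no gaps, which real sensors do not quite manage, and both the function name and the 24MP comparison figure are chosen purely for illustration.

```python
import math

def photoreceptor_pitch_um(width_mm, height_mm, megapixels):
    """Approximate side length of one photo receptor in micrometres.

    Assumption: square receptors covering the entire frame with no gaps.
    """
    frame_area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    receptor_area_um2 = frame_area_um2 / (megapixels * 1_000_000)
    return math.sqrt(receptor_area_um2)

# Two full frame sensors with different receptor counts (illustrative values).
pitch_24 = photoreceptor_pitch_um(36, 24, 24)
pitch_50 = photoreceptor_pitch_um(36, 24, 50)

# Ratio of light gathering surface per receptor, 24MP versus 50MP.
area_ratio = (pitch_24 / pitch_50) ** 2

print(f"24MP pitch: {pitch_24:.2f} um")
print(f"50MP pitch: {pitch_50:.2f} um")
print(f"Each 24MP receptor has {area_ratio:.2f}x the surface area")
```

Under those assumptions each receptor on the lower count sensor has roughly twice the surface area, which is the light gathering advantage described above. It says nothing about how well a given generation of sensor turns that light into clean data.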

The Crop Sensor

What we refer to as crop sensors exist because when the first CCD based sensors were made, the quality was so questionable that it was impossible to consistently build full frame sensors without a bunch of dead pixels. Rather than do nothing, the makers reduced the count, resulting in a cropped frame. That problem was solved a long time ago, and while full frame costs more to make, it does not cost much more. Makers now do crop sensors for other good reasons and create the illusion that full frame is always better by attaching a higher price to it. I mean they wouldn’t charge more for something based on marketing spin, would they?

How would you like a parcel of fertile land surrounded by water with lots of indigenous wildlife in a warm climate? Good. I have some swampland in Florida for you.

Crop sensors require a smaller image circle, which allowed manufacturers to make lenses with smaller elements. Since they wanted to maintain that full frame was better, some of these lenses were made very inexpensively, substituting plastic for metal, even in critical areas like the lens mount. The big perpetrators of this were Canon with the EF-S family and Nikon with the DX family. Not all of the lenses were cheap builds, but the better lenses were always the ones that were designed for full frame. Those designed exclusively for crop sensor confused the heck out of buyers because the full frame lenses used full frame focal lengths in their names, but the crop sensor lenses used crop sensor focal lengths in their names, so an 18mm crop sensor lens was and is different from an 18mm full frame lens. Makers like Fujifilm only do the smaller sensor, so their focal lengths are clear and they don’t get engaged in any of this full frame equivalent measurement foolishness.

Because the sensor is smaller, mounting a lens that generates a full frame image circle results in the sensor using a smaller portion of that image circle, giving the appearance of cropping in. This does not mean less resolution. If, for example, the photo receptor surface area is the same on the crop sensor as on the full frame sensor, the resolution at the pixel level is identical. There may be fewer photo receptors in the frame, but the resolution is the same. This fact is regularly misunderstood and misrepresented. If I make an enlargement of an image made from pixels with the same surface area to provide the same level of paper coverage (meaning I have cropped into the full frame), the image resolution in the print is identical.

Using Nikon or Fujifilm crop sensors as examples, they are roughly 44% of the total surface area of a full frame sensor. Now let’s assume that we have a full frame sensor and we are going to crop in to get the same angle of view as a crop sensor camera. If the crop sensor camera and the full frame sensor camera have identically sized photo receptors, then to match the resolution of a 20 million receptor crop sensor, I would need a 45 million receptor full frame. Plainly, if I crop in a lot, a larger sensor serves me best, but if I do my job as photographer and fill the frame, a crop sensor camera that fills the frame is a better choice numerically than a full frame that I have to crop to fill the frame. In this example the diagonal measurement of the crop sensor is about 1.5x smaller than that of the full frame sensor, so we say that a 100mm full frame lens on a crop sensor would look like a 150mm lens on a full frame. I think such mathematical gymnastics are pointless, but lots of folks worry about such things.
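For those who do want to see the gymnastics, the arithmetic in that paragraph can be sketched in a few lines of Python. The function names are made up for this illustration, and the sketch assumes a nominal 1.5x crop factor and identically sized photo receptors on both sensors.

```python
def area_fraction(crop_factor):
    """Crop sensor area as a fraction of full frame area.

    Area scales with the square of the linear crop factor.
    """
    return 1.0 / crop_factor ** 2

def full_frame_receptors_to_match(crop_megapixels, crop_factor):
    """Full frame receptor count (in millions) that leaves the same number
    of receptors after cropping in to the crop sensor's angle of view.
    Assumption: both sensors use the same size photo receptors.
    """
    return crop_megapixels * crop_factor ** 2

def full_frame_equivalent_focal_length(focal_length_mm, crop_factor):
    """Focal length on full frame that gives the same angle of view."""
    return focal_length_mm * crop_factor

CROP_FACTOR = 1.5  # nominal figure for Nikon DX and Fujifilm sensors

print(f"Area: {area_fraction(CROP_FACTOR):.0%} of full frame")
print(f"Match 20M receptors: {full_frame_receptors_to_match(20, CROP_FACTOR):.0f}M on full frame")
print(f"100mm looks like: {full_frame_equivalent_focal_length(100, CROP_FACTOR):.0f}mm")
```

The only real idea here is that focal length equivalence scales with the crop factor while area and receptor count scale with its square.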

Micro 4/3 Sensors

The M43 standard uses a smaller sensor still. Its overall surface area is 25% that of a full frame sensor. This sounds horrible if you think in terms of fixed film grain sizing, but that is a silly argument. If I have a full frame sensor with 40M photoreceptors and a M43 sensor with 40M photoreceptors, then we know, because we are measuring in two dimensions, that each photoreceptor on the M43 sensor is going to be about 1/4 the size of each one on full frame. Optically this would be much the same frame resolution, but the M43 sensor is going to be a lot noisier because of the smaller photoreceptors. However, if I use the same size photoreceptors, a M43 sensor of 20 million photoreceptors would be the same as a full frame sensor of 80 million photoreceptors. Again, if I am not cropping in, the larger sensor would have more resolution, but if I am doing a lot of cropping in on full frame to match the frame fill of a M43 sensor, I will be ahead of the game with the smaller sensor because the size and cost of the lenses will be less. To engage in the mathematical gymnastics, we say that the diagonal measurement is 2x smaller on the M43 sensor, and so a 300mm full frame lens mounted on a M43 camera would have the angle of view of a 600mm lens on a full frame camera.
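If you are curious where the 2x and 25% figures come from, they fall out of the sensor dimensions. This is a rough sketch only: the 17.3mm x 13.0mm figure is the commonly quoted nominal M43 size and is my assumption, not a number from any one camera, and the helper names are invented for the example.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)
M43_MM = (17.3, 13.0)  # assumed nominal dimensions, actual sensors vary slightly

def diagonal_mm(size_mm):
    """Length of the sensor diagonal."""
    width, height = size_mm
    return math.hypot(width, height)

def area_mm2(size_mm):
    """Sensor surface area."""
    width, height = size_mm
    return width * height

# Crop factor is the ratio of the diagonals.
crop_factor = diagonal_mm(FULL_FRAME_MM) / diagonal_mm(M43_MM)

# Area ratio is close to, but not exactly, 1 / crop_factor squared,
# because the two formats have different aspect ratios.
area_ratio = area_mm2(M43_MM) / area_mm2(FULL_FRAME_MM)

print(f"Crop factor: {crop_factor:.2f}")
print(f"M43 area: {area_ratio:.0%} of full frame")
print(f"300mm on M43 frames like {300 * crop_factor:.0f}mm on full frame")
```

With these assumed dimensions the crop factor lands within a hair of 2 and the area within a point or so of one quarter, which is why the round numbers are good enough for conversation.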

Aspect Ratios

The aspect ratio is expressed as the ratio of the long side dimension to the short side dimension. For full frame and APS-C crop sensors this is effectively 3:2, so a print of everything captured will have the same aspect ratio. Thus a print from either of these sensors would be 12x8. This means that the too common 10x8 paper or frame is cutting away part of the image. That’s ok if you planned for it, otherwise it is a pain in the butt.

In the case of M43 it is, unsurprisingly, 4:3. That makes the overall image less rectangular than the other sensors, but it also means that for the common 8x10 print or frame, the native print size would be 10.7x8, so another involuntary crop.

This may cause you to ask what matches a 10 x 8 frame. Anything with a 5:4 image ratio, such as a 4x5 or 8x10 sheet film negative. What? You don’t have that? Right you are. Frame makers have been screwing us over for 70 years making frames that don’t actually fit our images. That is a different rant.
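The print sizes above are simple ratio arithmetic, sketched here in Python for anyone who wants to check another paper size. The function names are hypothetical, and the sketch assumes you keep the 8 inch short side and trim only the long side to fit the frame.

```python
from fractions import Fraction

def native_long_side(short_side_in, long_part, short_part):
    """Long side of an uncropped print for a given short side and aspect ratio."""
    return short_side_in * Fraction(long_part, short_part)

def fraction_trimmed(native_long_in, frame_long_in):
    """Share of the image's long side cut away to fit the frame."""
    return 1 - Fraction(frame_long_in) / native_long_in

aspect_ratios = {
    "Full frame / APS-C (3:2)": (3, 2),
    "M43 (4:3)": (4, 3),
    "4x5 sheet film (5:4)": (5, 4),
}

for name, (long_part, short_part) in aspect_ratios.items():
    long_side = native_long_side(8, long_part, short_part)
    lost = fraction_trimmed(long_side, 10)
    print(f"{name}: native print {float(long_side):.1f}x8, "
          f"{float(lost):.1%} of the long side trimmed for a 10x8 frame")
```

Only the 5:4 format drops into a 10x8 frame untouched. The 3:2 sensors give up a sixth of the long side, and M43 gives up a sixteenth.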

The Missing Link

Thus far we have looked at the sensors only. While this makes the learning simpler and is quantifiable, what we have left out is that there is more to digital photography than the sensor. You can get pretty decent looking images out of your smartphone despite its microscopic sensor because over 85% of the image happens in image processing before storage. You know of this as computational photography, or what you see is not what you get. What you get is the result of a set of algorithms programmed to deliver a set of predefined optimal results. Multiple frames used to refine focus, tiny focal lengths that lean on depth of field to cover for missed focus, filters to make blurry that which was not, colour enhancement, saturation and vibrance enhancement, image stacking and myriad other functions that happen without your knowledge or involvement. No one complains because they like the results.

Well folks, there is nothing pure about your digital photography with your DSLR or mirrorless either. “I shoot RAW” is just a slogan that has limited meaning anymore because every maker does preprocessing in camera BEFORE the RAW file is written to memory. You do not know what is being done, the makers do not tell you, and you have no choice in the matter of whether it gets done or not. Suck it up buttercup, RAW is not actually RAW, it is the equivalent of genetically modified food. Whether that bothers you or not is your choice, because it does not matter, since you can neither turn it off nor turn it on.

The in camera JPEG is something that you can control, mostly. However, this is also changing. Many cameras today do pre-shooting, holding frames in memory on the shutter half press, dumping them if you never release the shutter but using them as a tool to allow for the “best” image when you do. Or the camera may offer in body focus stacking, where the camera takes a series of images and then blends and masks them to produce a final image that has more depth of field than any one shot could.

These tools can be huge benefits to the photographer so long as you know that you didn’t make the image, you were just there to push the button. There is already a discussion on copyrights and AI going on, with folks pondering whether the AI or the person holding the camera owns the copyright. I avoid these discussions mostly because I don’t care, but there are very serious and very intelligent people thinking about this. Will a self aware AI demand its copyright? Probably right after it decimates all humans.

Wrapping It Up

What I hope that you can take from this is that the argument of what sensor size is best is a waste of time as a generality. What is a fair analysis is to determine what sensor size best suits you in your use cases along with all the considerations of cost, weight, portability and the like. So what sensor size is the most popular? Being a photographer is an act of self declaration. I might call myself a photographer and you might think that I’m a hack and we would both be correct. So if I follow the context of self-declaration, the most popular sensor size is that of a three generation old smartphone. And on that thought, I will go get a beverage.

Please subscribe to be notified when new content is posted and thanks for being part of The Photo Video Guy community. Until next time, peace.