Answering a Sensor Question

Hello all. Reader Dean sent me a direct question via the link here and, after replying, I thought the concepts would make a decent post, so here we go.

Overview

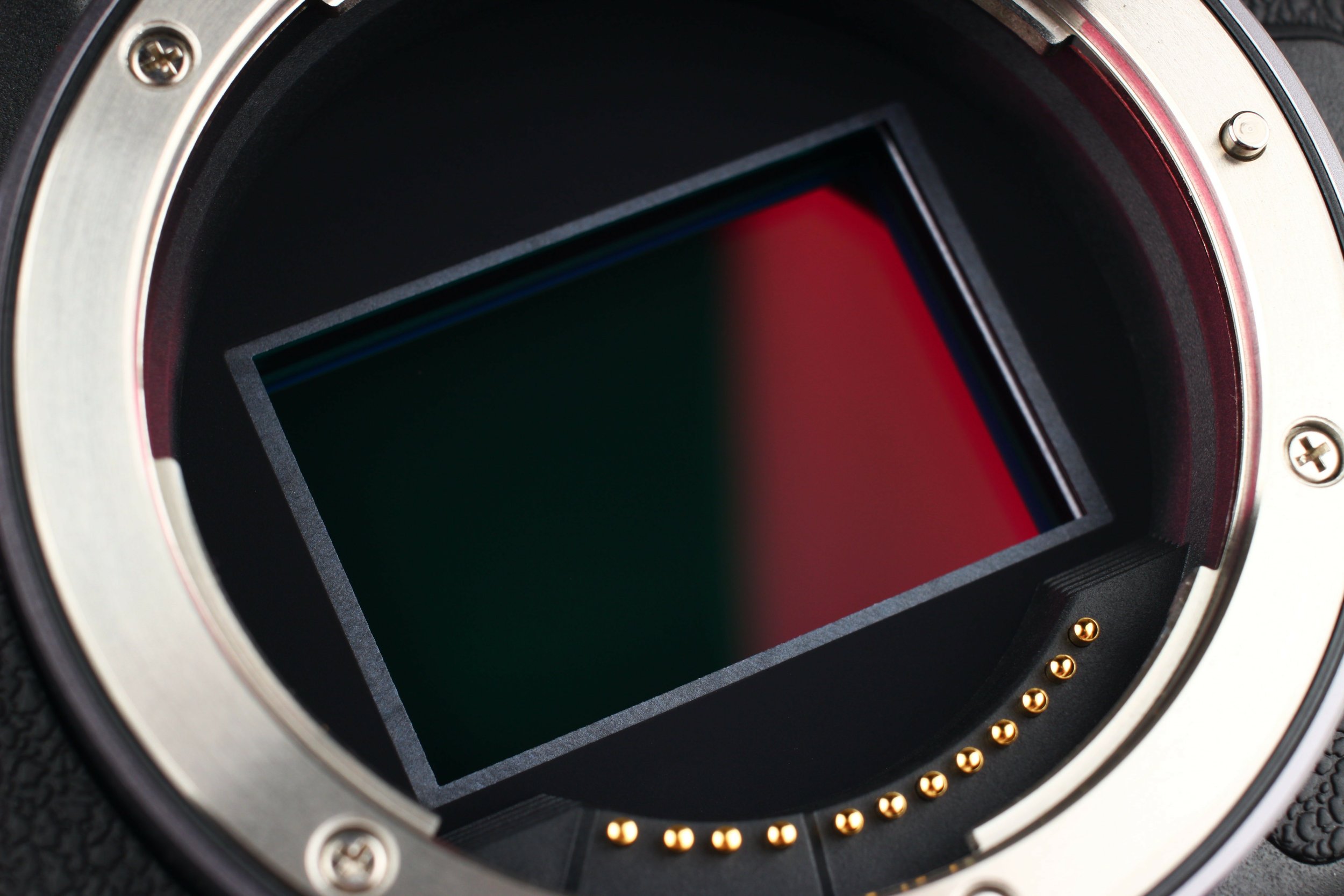

When we talk about sensors, as you well understand, we are actually talking about a collection of photoreceptors arranged in quads using a Bayer model based on the light bias of the human eye. So in each quad, we have two receptors (pixels) that “see” green, one that sees red and one that sees blue. The surface area of the individual receptor has a significant impact on data gathering. The larger the surface area, the better the pixel can gather light, meaning that with the same voltage applied, a pixel with a larger surface area gathers more light than one with a smaller surface area. Needing less voltage for a given light level gives a superior signal to noise ratio, because as we increase voltage to the receptor, we also increase noise. We can see this by comparing two sensors of the same physical dimensions; let’s use the crop sensor model as an example. A 20 million pixel sensor covers the same area as a 50 million pixel sensor, but because each pixel on the lower pixel count sensor has a larger surface area, we see better performance in low light / high ISO. This is basic science.
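To put rough numbers on that comparison, here is a minimal sketch in Python. It assumes a crop sensor of 23.5mm x 15.7mm and pretends the whole sensor surface gathers light, which real sensors do not quite achieve. The function name is my own, purely for illustration.

```python
# Rough area available per pixel, assuming the entire sensor surface is
# divided evenly among the pixels (real sensors lose some area to wiring).
SENSOR_WIDTH_MM = 23.5   # assumed crop sensor width
SENSOR_HEIGHT_MM = 15.7  # assumed crop sensor height

def area_per_pixel_um2(pixel_count: int) -> float:
    """Return the average area per pixel in square micrometres."""
    sensor_area_um2 = (SENSOR_WIDTH_MM * 1000) * (SENSOR_HEIGHT_MM * 1000)
    return sensor_area_um2 / pixel_count

area_20mp = area_per_pixel_um2(20_000_000)
area_50mp = area_per_pixel_um2(50_000_000)
print(f"20MP: {area_20mp:.2f} square micrometres per pixel")
print(f"50MP: {area_50mp:.2f} square micrometres per pixel")
print(f"Ratio: {area_20mp / area_50mp:.1f}x")
```

Whatever sensor size is assumed, the ratio between the two depends only on the pixel counts, so each pixel on the 20MP sensor has about 2.5 times the area of a pixel on the 50MP sensor.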

That you see good images from smaller sensors is no surprise. Consider a 40 megapixel sensor such as the one in the Fujifilm T5, an excellent product. In the space of 23.5mm x 15.7mm using a 3:2 aspect ratio, we count 7728 pixels wide by 5152 pixels tall. This data comes directly from Fujifilm.

The math thus tells us that there are for all intents and purposes 328.85 pixels per millimetre on the sensor (7728 pixels divided by 23.5mm). As we measure screen and print resolution in inches, we can do the metric to imperial conversion at 25.4mm to the inch and discover that this would be 8352.8 pixels per inch. This is as expected from the data, but many people are astounded to learn that the PPI count is so high.
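For anyone who wants to check the arithmetic, a short Python sketch follows. It uses only the Fujifilm figures quoted above; the function name is mine and simply illustrative.

```python
MM_PER_INCH = 25.4

def pixel_density(pixels: int, length_mm: float):
    """Return (pixels per millimetre, pixels per inch) along one axis."""
    per_mm = pixels / length_mm
    return per_mm, per_mm * MM_PER_INCH

# T5 sensor: 7728 pixels across 23.5mm
per_mm, per_inch = pixel_density(7728, 23.5)
print(f"{per_mm:.2f} pixels per mm, {per_inch:.1f} pixels per inch")
```

Running it on the short side (5152 pixels over 15.7mm) gives a very slightly different number, because the published dimensions are rounded.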

Can We See the Real Resolution on a Display?

Now if we were to assess our standard common Full HD display, aka a 2K display, we would find that the display shows a resolution of between 72 and 80 pixels per inch, depending on the physical size of the panel. As the math is linear in one axis, a 4K display of the same size would then provide between 144 and 160 pixels per inch. It is easy to see that the sensor from the example T5 has much more resolution than a quality 4K display, by a factor of over 52x. We may tell ourselves that we can see a difference between a crop sensor at 20MP and a crop sensor at 40MP, but we are deceiving ourselves. NO display comes anywhere near the resolution of any sensor. Even the 12MP sensor in a smartphone has much more resolution than any display currently available.
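Here is a small Python sketch of that comparison. The ratios use the numbers from this post. The helper that works out a display's pixels per inch from its diagonal is my own addition, and the 28 inch panel size is only an assumed example.

```python
import math

def display_ppi(pixels_wide: int, pixels_tall: int, diagonal_inches: float) -> float:
    """Pixels per inch of a display, from its resolution and diagonal size."""
    diagonal_pixels = math.hypot(pixels_wide, pixels_tall)
    return diagonal_pixels / diagonal_inches

SENSOR_PPI = 8352.8  # the T5 figure worked out earlier in this post

# Ratio of sensor density to the 4K display densities quoted above.
ratio_vs_160 = SENSOR_PPI / 160
ratio_vs_144 = SENSOR_PPI / 144
print(f"Sensor vs 4K display: {ratio_vs_160:.1f}x to {ratio_vs_144:.1f}x")

# Hypothetical example: a 28 inch Full HD panel (the size is an assumption).
print(f"28 inch Full HD: {display_ppi(1920, 1080, 28):.1f} pixels per inch")
```

Note that these are linear ratios along one axis, the same way the pixels per inch figures themselves are measured.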

Can We See the Real Resolution on a Professional Print?

When we talk about prints, it is common, although not necessary, for people to think in terms of dots per inch. Dots represent pixels, but printers receiving a 300 DPI file will interpolate and often actually deliver more than 1440 dots per inch. This is significantly superior to any display but still far less than what the sensor delivers. From this we can rightly conclude that the only rationale for large megapixel counts is a requirement to deliver massive prints, because no screen on the market can show the actual sensor resolution.

What About Larger Sensor Systems?

When we move to medium format, we get pixels with a larger surface area. This means better signal to noise ratios, which can deliver a better looking image than from a smaller sensor. If we consider the Fujifilm 50S, another excellent camera, we find a sensor defined in a 4:3 aspect ratio that delivers 8256 pixels across its 43.8mm width and 6192 pixels down its 32.9mm height. Converted to pixels per inch we find 4787.7 pixels per inch. That is a much smaller number of pixels per inch than on the T5, so one could say correctly that the pixel resolution of the medium format sensor is LESS than the crop sensor. However, the surface area of each pixel is much larger and so has superior data gathering capability and superior signal to noise over the crop sensor. Thus for the same size print at the same viewing distance, we would perceive that the image from the 50S is “better” than the image from the T5, even though the T5’s technical resolution is greater.
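The trade-off between pixel density and pixel size can be sketched in a few lines of Python. This uses the published dimensions quoted in this post and treats each pixel as a square that fills its whole cell, which is a simplification on my part. The function names are illustrative only.

```python
MM_PER_INCH = 25.4

def ppi(pixels: int, length_mm: float) -> float:
    """Pixels per inch along one axis of the sensor."""
    return pixels / length_mm * MM_PER_INCH

def pitch_um(pixels: int, length_mm: float) -> float:
    """Approximate width of one pixel in micrometres."""
    return length_mm / pixels * 1000

ppi_50s = ppi(8256, 43.8)          # Fujifilm 50S, long edge
pitch_t5 = pitch_um(7728, 23.5)    # Fujifilm T5, long edge
pitch_50s = pitch_um(8256, 43.8)

# Area scales with the square of the pitch if pixels are treated as squares.
area_ratio = (pitch_50s / pitch_t5) ** 2

print(f"50S: {ppi_50s:.1f} pixels per inch")
print(f"Pixel pitch: T5 {pitch_t5:.2f} micrometres, 50S {pitch_50s:.2f} micrometres")
print(f"Each 50S pixel has roughly {area_ratio:.1f}x the area of a T5 pixel")
```

So under these assumptions the 50S gives up a bit over 40 percent of the T5's linear pixel density, and in return each pixel gets about three times the surface area.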

Regardless, the 50S sensor resolution is still far and away greater than any 2K, 4K or even 8K display. We cannot on any display see the actual capability of that sensor. This is a fact of science, and sadly marketing ignores science on a regular basis.

There is a difference in other medium format digital cameras. First, the sensors are physically larger than in the Fujifilm, whose size is not “true” medium format. Compared to a Hasselblad or Phase One, the Fujifilm sensor is quite a bit smaller. More important is that the Fujifilm sensor in the 50S supports a maximum of 14 bit colour, the same as the T5, whereas the Hasselblad and Phase One sensors support 16 bit colour, and that difference is substantial. 14 bit colour gives 16384 possible values for each of red, green and blue, which candidly is wonderful. However, 16 bit colour gives 65536 possible values for each of red, green and blue. This is a true 16 bit colour space as noted in the specifications for what is called Adobe RGB, a much wider colour palette than 14 bit. For those of us shooting what we commonly call full frame, crop sensor or micro four thirds, we get 14 bit RAW, although some cameras offer a 12 bit RAW option. It is important to recognize that JPEGs are all 8 bit colour, fine for most displays but a significant loss of colour range if JPEG is the default capture format.
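The value counts above come straight from powers of two. A tiny Python sketch, with a function name of my own choosing, shows where each number comes from:

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one colour channel can hold at this bit depth."""
    return 2 ** bits

for bits in (8, 12, 14, 16):
    print(f"{bits} bit: {levels_per_channel(bits)} values per channel")
```

Each extra bit doubles the count, so 16 bit has four times as many values per channel as 14 bit, and 256 times as many as the 8 bits of a JPEG.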

When we come back to our incredibly low res displays, most are only capable of 8 bit colour (sRGB) and the screen cannot represent either 14 bit or 16 bit colour properly. A display built to deliver Adobe RGB can show the difference, but again the resolution is so low it is effectively undetectable. You can see it in a print IF the printer can accept a 16 bit print job. This means using a local photo printer or using a service that will accept a 16 bit TIFF file. Prints from JPEGs are ALWAYS sRGB and 8 bit and so again, the value proposition is lost in translation.

This does not mean that these displays or prints from JPEGs look bad. They simply do not deliver what the camera recorded in the RAW file and cannot represent that data in terms of real resolution and potentially bit depth.

Fujifilm made a change in the new GFX 100 S II in that the sensor will now offer the option to record in 16 bit RAW, like a Hasselblad or Phase One. This brings the capability for true 16 bit colour gamut, but the limitations of displays and prints still apply.

I do not doubt any belief that you can see a difference between 17x22 prints from a crop sensor and from the Fujifilm 50S sensor. Beliefs are a powerful thing, even when not supported by the science. Thus I don’t get into beliefs or perceptions in an analysis, and stick to the math because it is definitive.

Wrapping Up

The only thing that really matters is if you are happy with your work. If you are, the rest is noise. Some of my favourite photographs ever made were done on low performing lenses on film with only five stops of dynamic range. The technical is interesting, but if the image does nothing for me, I move past it and never think about it again. You are your own person and should do what pleases you.

Please become a member on Patreon to help support this channel. A big thanks to all the existing Patreon members! Send in comments or questions, I read and respond to all. If you shop with B&H Photo Video, please use the link on the main page as it pays me a small commission and does not cost you anything to do so. Thanks again and we will see each other again soon.